This blog post aims to provide a basis for discussion that promotes cyber awareness in the healthcare sector, a highly specialized and sensitive field. It addresses, in turn, the general architecture of artificial intelligence (AI) models, the application of AI to disease detection, and, through a sample scenario, how such models can be deceived. Although the post cites relevant literature, it has not undergone peer review and should not be considered a scientific publication. Its primary objective is to offer readers a different perspective on cybersecurity in healthcare.

AI Pipeline

Artificial intelligence (AI), a term coined by J. McCarthy in 1956, is a broad concept that encompasses machine learning and, within it, deep learning. Delving deeper into the AI domain reveals subfields such as image processing, speech processing, text processing, and natural language processing, each requiring distinct expertise. While each of these fields has its own micro-level methods, there is also a macro-level methodology that applies across the entire AI spectrum.

In 2020, Hapke and Nelson described the AI pipeline in the following sequence:

- Data ingestion

- Data validation

- Data preprocessing

- Model training and tuning

- Model analysis

- Model validation

- Model deployment

- Model feedback
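
Because each stage hands its output to the next, every hand-off is also a point where data could be altered, by mistake or on purpose. The sketch below is a minimal illustration under our own assumptions: it chains only the first three stages as plain Python functions over hypothetical toy records and logs a SHA-256 fingerprint of the data after each stage, so that a later change can be detected. The field names, stage bodies, and the `fingerprint`/`run` helpers are placeholders, not code from Hapke and Nelson (2020).

```python
import hashlib
import json
from typing import Any, Callable, Dict, List, Tuple

Records = List[Dict[str, Any]]
Stage = Callable[[Records], Records]

def fingerprint(records: Records) -> str:
    """SHA-256 digest of the records serialized as canonical (key-sorted) JSON."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def ingest(_: Records) -> Records:
    # Hypothetical toy records; None marks a missing measurement
    return [
        {"id": 1, "measurement": 60, "label": 0},
        {"id": 2, "measurement": None, "label": 1},
        {"id": 3, "measurement": 35, "label": 1},
    ]

def validate(records: Records) -> Records:
    # Reject records with missing measurements
    return [r for r in records if r["measurement"] is not None]

def preprocess(records: Records) -> Records:
    # Scale measurements from a 0-100 range to 0-1
    return [{**r, "measurement": r["measurement"] / 100} for r in records]

STAGES: List[Tuple[str, Stage]] = [
    ("data ingestion", ingest),
    ("data validation", validate),
    ("data preprocessing", preprocess),
]

def run(stages: List[Tuple[str, Stage]]) -> Tuple[Records, List[Tuple[str, str]]]:
    """Run stages in order, logging (stage name, data fingerprint) after each one."""
    records: Records = []
    audit: List[Tuple[str, str]] = []
    for name, stage in stages:
        records = stage(records)
        audit.append((name, fingerprint(records)))
    return records, audit

records, audit = run(STAGES)
for name, digest in audit:
    print(f"{name}: {digest[:12]}")
```

Storing these digests outside the pipeline, for example in an append-only log, would let a reviewer confirm later that the data reaching the training stage is the data that validation approved.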

This brief overview of the AI pipeline gives readers a foundation for understanding AI model fallibility, which is discussed in the next section.

AI-Based Diagnostics in Healthcare Services

Advances in technology, a growing global population and patient load, and the limited number of essential healthcare providers such as physicians and nurses have necessitated a transformation in traditional healthcare delivery (Hassmiller & Wakefield, 2022; Haleem et al., 2022). As a result of this transition, in-person diagnosis and treatment have become relatively less common, while emerging health technologies have made remote diagnosis and treatment possible (Behar et al., 2020).

The journey began with disease prediction studies based on data repositories accumulated from electronic health records (EHRs) and has since expanded into a wide array of applications, including early-stage cancer detection through image processing, glaucoma diagnosis from ocular data, and identification of psychological disorders from patient notes. Health insurance has also undergone fundamental changes, such as behavior-based risk prediction, disease scoring, and AI-driven predictive analytics.

Although these new techniques and technologies were initially welcomed with enthusiasm as problem-solving tools, the inherent fallibility of AI models has also sparked ethical debates.

AI-based disease prediction models are reliable only within a certain confidence range. However, the literature shows no consensus on the exact thresholds for these ranges. The confusion matrix and metrics derived from it, such as accuracy and the F1 score, are frequently used to assess model reliability, yet a standardized confidence range for each model has not been established.
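
To make these metrics concrete, the sketch below derives a binary confusion matrix from hypothetical labels and predictions and computes accuracy and the F1 score from it in plain Python. With imbalanced classes, as is typical when a disease is rare, the same predictions score 0.9 on accuracy but only about 0.67 on F1, which illustrates why a single agreed-upon threshold is hard to set. The data and function names are illustrative assumptions.

```python
from typing import Dict, Sequence

def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count true/false positives/negatives for binary labels (1 = disease present)."""
    cm = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for truth, pred in zip(y_true, y_pred):
        if pred == 1:
            cm["tp" if truth == 1 else "fp"] += 1
        else:
            cm["fn" if truth == 1 else "tn"] += 1
    return cm

def accuracy(cm: Dict[str, int]) -> float:
    """Share of all cases classified correctly."""
    return (cm["tp"] + cm["tn"]) / sum(cm.values())

def f1_score(cm: Dict[str, int]) -> float:
    """Harmonic mean of precision and recall; 0.0 when undefined."""
    predicted_pos = cm["tp"] + cm["fp"]
    actual_pos = cm["tp"] + cm["fn"]
    precision = cm["tp"] / predicted_pos if predicted_pos else 0.0
    recall = cm["tp"] / actual_pos if actual_pos else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0

# Hypothetical screening result: 2 of 10 patients have the disease; the model finds 1.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
cm = confusion_matrix(y_true, y_pred)
print(cm, accuracy(cm), round(f1_score(cm), 3))
```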

Accurately assessing this uncertainty requires careful consideration of several key criteria, including the volume of data used, the proportions allocated to the training and test sets, and the correct labeling of data in supervised learning models. In this context, it is also plausible that models could be manipulated at various stages of the AI pipeline.
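
The labeling criterion can be made concrete with a toy example of label flipping, a simple form of data poisoning. The sketch below assumes a hypothetical one-dimensional classifier whose decision threshold lies halfway between the mean feature values of the two classes; flipping just two training labels shifts that threshold far enough that a diseased case in the test set is missed. The data, the classifier, and the helper names are invented for illustration and do not represent any real diagnostic model.

```python
from typing import Iterable, List, Tuple

Sample = Tuple[float, int]  # (feature value, label): 1 = disease, 0 = healthy

def fit_threshold(samples: List[Sample]) -> float:
    """Toy classifier: decision threshold halfway between the two class means."""
    pos = [x for x, y in samples if y == 1]
    neg = [x for x, y in samples if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def flip_labels(samples: List[Sample], indices: Iterable[int]) -> List[Sample]:
    """Invert the labels at the given positions (a labeling error or deliberate poisoning)."""
    targets = set(indices)
    return [(x, 1 - y) if i in targets else (x, y) for i, (x, y) in enumerate(samples)]

def accuracy(samples: List[Sample], threshold: float) -> float:
    """Share of samples where 'x >= threshold' agrees with the label."""
    return sum((x >= threshold) == (y == 1) for x, y in samples) / len(samples)

# Hypothetical training and test data
train = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
test = [(4.0, 0), (6.0, 1), (7.0, 1)]

clean_t = fit_threshold(train)                          # 5.0
poisoned_t = fit_threshold(flip_labels(train, [3, 4]))  # roughly 6.6
print(accuracy(test, clean_t), accuracy(test, poisoned_t))
```

The clean model classifies all three test cases correctly; the poisoned one misses the diseased case at 6.0, even though only a third of the training labels changed.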

An Illustrative Scenario of AI Model Manipulation in Healthcare

Three factors can be cited as key grounds for possible manipulation of AI in healthcare: the basic requirements for keeping AI models within a reliable confidence range, the possibility of manual intervention at various stages of model development, which is inherent to how AI systems are built, and potential bias among model developers. This post develops a sample scenario illustrating a potential system vulnerability, stemming from human error such as oversight, that could be exploited (or hacked). The scenario is based on recent research data and reflects a plausible use case in health insurance.

In a 2024 study conducted by Chiu et al., a deep learning model named EchoNet-Pericardium was developed using spatiotemporal convolutional neural networks to automate the grading of pericardial effusion severity and the detection of cardiac tamponade from echocardiographic videos.

The model was trained on a retrospective dataset comprising 1,427,660 video clips derived from 85,380 echocardiograms collected from patients at Cedars-Sinai Medical Center (CSMC). The system demonstrated high diagnostic performance, reporting an area under the curve (AUC) of 0.900 (95% CI: 0.884–0.916) for detecting moderate or greater pericardial effusion, 0.942 (95% CI: 0.917–0.964) for large pericardial effusion, and 0.955 (95% CI: 0.939–0.968) for cardiac tamponade.
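
Figures such as an AUC with a 95% confidence interval combine two computations. The sketch below shows one common way to obtain them: the AUC computed as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case (ties count as half), and a percentile bootstrap over cases for the interval. It is a generic illustration on hypothetical inputs, not necessarily the procedure Chiu et al. (2024) used.

```python
import random
from typing import List, Sequence, Tuple

def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))

def bootstrap_ci(y_true: Sequence[int], scores: Sequence[float],
                 n_boot: int = 1000, alpha: float = 0.05, seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap interval for the AUC, resampling cases with replacement."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    n = len(y_true)
    stats: List[float] = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        resampled = [y_true[i] for i in idx]
        if 0 < sum(resampled) < n:  # skip resamples containing only one class
            stats.append(auc(resampled, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int(alpha / 2 * n_boot)]
    upper = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper

# Hypothetical labels (1 = condition present) and model scores
y = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 1, 1]
s = [0.1, 0.2, 0.3, 0.35, 0.4, 0.45, 0.6, 0.7, 0.75, 0.8, 0.85, 0.9]
print(round(auc(y, s), 3), bootstrap_ci(y, s))
```

Such an interval reflects sampling variability within the evaluation set only; it says nothing about cases that never appeared in that set.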

The results reported in the study indicate that the EchoNet-Pericardium model, trained on echocardiogram data from actual patients, can detect certain significant cardiac conditions with high reliability.

However, the data used to develop the system appear to have come from patients whose hearts are in the usual left-sided position. The study does not report testing the model on images from asymptomatic individuals with dextrocardia, a congenital condition in which the heart lies on the right side of the chest, who otherwise lead normal lives.
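
One way to probe this kind of gap before deployment is a metamorphic test: apply a transformation that should not change the clinical answer and check whether the model's output changes. The sketch below assumes that a left-to-right mirror is a rough proxy for a right-sided heart (real dextrocardia imaging is not simply a mirror image) and uses a hypothetical `toy_model` that has absorbed a positional shortcut. It is not the EchoNet-Pericardium model or its interface, and whether invariance is the right expectation for a given finding is a clinical judgment.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale pixel intensities in [0, 1]

def mirror(image: Image) -> Image:
    """Flip an image left to right, a crude stand-in for a right-sided heart."""
    return [list(reversed(row)) for row in image]

def toy_model(image: Image) -> float:
    """Hypothetical model that learned the shortcut 'bright mass on the right = abnormal'."""
    width = len(image[0])
    right = sum(sum(row[width // 2:]) for row in image)
    total = sum(sum(row) for row in image)
    return right / total if total else 0.0

def mirror_consistency(model: Callable[[Image], float], image: Image, tol: float = 0.1) -> bool:
    """True if the model's score changes by at most `tol` when the image is mirrored."""
    return abs(model(image) - model(mirror(image))) <= tol

# Hypothetical 2x4 "image" with a bright structure on the left, as in a left-sided heart
left_heart = [[0.9, 0.8, 0.1, 0.0], [0.9, 0.9, 0.1, 0.0]]
print(toy_model(left_heart), toy_model(mirror(left_heart)), mirror_consistency(toy_model, left_heart))
```

Here the score of the same healthy "heart" jumps from about 0.05 to about 0.95 when mirrored, so the check fails and flags the shortcut.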

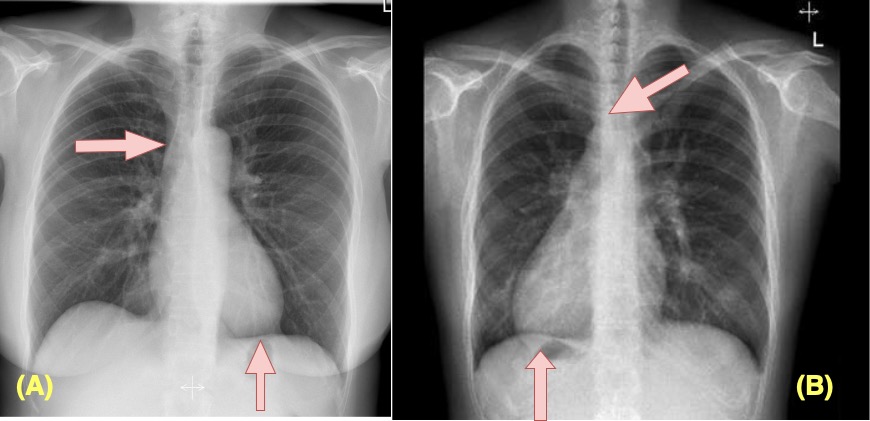

Figure 1 illustrates chest X-rays showing both left- and right-sided hearts, each representing normal (disease-free) anatomy.

Figure 1. Left-Sided and Right-Sided Heart Presentation (PACs)

Source: (A) Iino et al. (2012); (B) Radiopaedia (2023). The images were adapted from the sources cited in (A) and (B). Arrows mark the base and apex of the heart to show a normal heart position and dextrocardia.

The hypothetical scenario developed in this post illustrates the confusion that an image of a right-sided heart (dextrocardia) could cause if submitted to the EchoNet-Pericardium model. The model could be misled by the echocardiogram of an individual with congenital dextrocardia who has no heart disease: owing to imperfect edge detection, it may interpret this normal but rare anatomical variation as a pathological condition. If such an output were used to determine insurance risk automatically, it could result in significant financial loss.
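
One safeguard against this failure mode is to avoid fully automated decisions for cases the model was not validated on. The sketch below is a hypothetical routing rule, not part of the study or of any insurer's system: a prediction is acted on automatically only when an upstream check marks the input as in-distribution and the score lies clearly away from the decision threshold; everything else goes to human review. The `in_distribution` flag, `threshold`, and `margin` values are illustrative assumptions, and building a reliable upstream check is itself the hard part.

```python
from dataclasses import dataclass
from enum import Enum

class Decision(Enum):
    AUTO_LOW_RISK = "auto_low_risk"
    AUTO_HIGH_RISK = "auto_high_risk"
    HUMAN_REVIEW = "human_review"

@dataclass
class Prediction:
    score: float           # model's estimated probability of pathology, in [0, 1]
    in_distribution: bool  # hypothetical upstream check, e.g. anatomy seen in training data

def route(pred: Prediction, threshold: float = 0.5, margin: float = 0.15) -> Decision:
    """Automate only clear-cut, in-distribution cases; send the rest to a clinician."""
    if not pred.in_distribution:
        return Decision.HUMAN_REVIEW
    if abs(pred.score - threshold) < margin:
        return Decision.HUMAN_REVIEW  # too close to the threshold to trust
    return Decision.AUTO_HIGH_RISK if pred.score >= threshold else Decision.AUTO_LOW_RISK

# Hypothetical scenario case: high pathology score, unusual anatomy flagged upstream
print(route(Prediction(score=0.95, in_distribution=False)).name)  # HUMAN_REVIEW
```

Under this rule, the dextrocardia case would reach a clinician even with a high pathology score, provided the upstream check flags the unusual anatomy.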

Results

New technical and technological solutions are being widely implemented across many fields, including healthcare services, and the number of such applications is expected to grow. Because of the inherent nature of AI-based algorithms, they can be fallible and vulnerable to manipulation. Stakeholders developing similar applications should therefore consider all plausible scenarios in order to address system vulnerabilities, minimize human error, and test systems rigorously from diverse perspectives.

References

Behar, J. A., Liu, C., Kotzen, K., Tsutsui, K., Corino, V. D., Singh, J., … & Clifford, G. D. (2020). Remote health diagnosis and monitoring in the time of COVID-19. Physiological Measurement, 41(10), 10TR01.

Chiu, I. M., Vukadinovic, M., Sahashi, Y., Cheng, P. P., Cheng, C. Y., Cheng, S., & Ouyang, D. (2024). Automated Evaluation for Pericardial Effusion and Cardiac Tamponade with Echocardiographic Artificial Intelligence. medRxiv, 2024.11.27.24318110. https://doi.org/10.1101/2024.11.27.24318110

Haleem, A., Javaid, M., Singh, R. P., & Suman, R. (2022). Medical 4.0 technologies for healthcare: Features, capabilities, and applications. Internet of Things and Cyber-Physical Systems, 2, 12-30.

Hapke, H., & Nelson, C. (2020). Building machine learning pipelines. O’Reilly Media.

Hassmiller, S. B., & Wakefield, M. K. (2022). The future of nursing 2020–2030: Charting a path to achieve health equity. Nursing Outlook, 70(6), S1-S9.

Iino, K., Watanabe, G., Ishikawa, N., & Tomita, S. (2012). Total endoscopic robotic atrial septal defect repair in a patient with dextrocardia and situs inversus totalis. Interactive CardioVascular and Thoracic Surgery, 14(4), 476-478.

Radiopaedia. (2023, March). Chest (PA view). In Radiopaedia. Retrieved June 29, 2025, from https://radiopaedia.org/articles/chest-pa-view-1
